7.4. Solutions

Solution to Exercise 7.3 (Inferring galactic distances)

A fixed value for \(H\) can be assigned with the PDF \(\pdf{H}{I} = \delta(H-H_0)\), where \(\delta(x)\) is the Dirac delta function. Integrals over a delta function are given by \(\int_{-\infty}^{+\infty} f(x) \delta(x-x_0) dx = f(x_0)\), such that

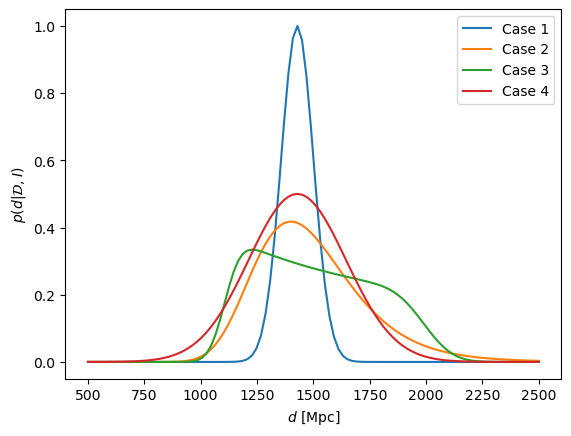

\[ \pdf{d}{\data,I} \propto \exp\left( - \frac{(v_0 - H_0 d)^2}{2\sigma_v^2} \right), \]
where we have ignored all normalization coefficients.

With a Gaussian prior for \(H\) we will be left with an integral

\[ \pdf{d}{\data,I} \propto \int_{-\infty}^{+\infty} dH \exp\left( - \frac{(H-H_0)^2}{2 \sigma_H^2} \right) \exp\left( - \frac{(v_0 - H d)^2}{2\sigma_v^2} \right). \]
We can perform this integral numerically, or we can solve it analytically by realizing that the integrand is a product of two Gaussians in \(H\), which is itself an (unnormalized) Gaussian in \(H\).
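As a sketch of the analytic route, completing the square in \(H\) and keeping the \(d\)-dependent prefactor (the same flat treatment of \(d\) as in the expressions above is assumed) should give

\[ \pdf{d}{\data,I} \propto \frac{1}{\sqrt{\sigma_v^2 + d^2 \sigma_H^2}} \exp\left( - \frac{(v_0 - H_0 d)^2}{2\left(\sigma_v^2 + d^2 \sigma_H^2\right)} \right). \]

Note that this is not a Gaussian in \(d\), since the effective width \(\sqrt{\sigma_v^2 + d^2 \sigma_H^2}\) itself grows with \(d\). In the limit \(\sigma_H \to 0\) the fixed-\(H\) result is recovered.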

The uniform prior for \(\pdf{H}{I}\) implies that the final integral becomes

\[ \pdf{d}{\data,I} \propto \int_{H_0 - 2\sigma_H}^{H_0 + 2\sigma_H} dH \exp\left( - \frac{(v_0 - H d)^2}{2\sigma_v^2} \right). \]

For numerical integration, and plots of the results of the three inference strategies, see the hidden code block below.
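A minimal, dependency-free sketch of such a numerical integration could look as follows. The numerical values of `v0`, `sigma_v`, `H0`, `sigma_H` and the distance grid are hypothetical and chosen only for illustration, the helper names are not taken from the original code, and plotting is left out. A simple trapezoidal rule is assumed to be accurate enough for these smooth integrands.

```python
import math


def likelihood(d, H, v0, sigma_v):
    """Unnormalized Gaussian likelihood of measuring v0 given distance d and Hubble constant H."""
    return math.exp(-0.5 * ((v0 - H * d) / sigma_v) ** 2)


def trapezoid(f, a, b, n=400):
    """Composite trapezoidal rule for the integral of f over [a, b] with n panels."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h


def posterior_fixed(d, v0, sigma_v, H0):
    """Delta-function prior: H is pinned to H0."""
    return likelihood(d, H0, v0, sigma_v)


def posterior_gauss(d, v0, sigma_v, H0, sigma_H):
    """Gaussian prior on H, marginalized numerically over H0 +/- 8 sigma_H."""
    def integrand(H):
        prior = math.exp(-0.5 * ((H - H0) / sigma_H) ** 2)
        return prior * likelihood(d, H, v0, sigma_v)
    return trapezoid(integrand, H0 - 8 * sigma_H, H0 + 8 * sigma_H)


def posterior_uniform(d, v0, sigma_v, H0, sigma_H):
    """Uniform prior on H over the interval H0 +/- 2 sigma_H."""
    return trapezoid(lambda H: likelihood(d, H, v0, sigma_v),
                     H0 - 2 * sigma_H, H0 + 2 * sigma_H)


def normalize(values, step):
    """Rescale tabulated values so that they sum to one on a uniform grid."""
    area = sum(values) * step
    return [v / area for v in values]


# Hypothetical inputs (velocities in km/s, H in km/s/Mpc, distances in Mpc).
v0, sigma_v, H0, sigma_H = 100000.0, 5000.0, 70.0, 10.0
step = 10.0
d_grid = [500.0 + step * i for i in range(301)]

p_fixed = normalize([posterior_fixed(d, v0, sigma_v, H0) for d in d_grid], step)
p_gauss = normalize([posterior_gauss(d, v0, sigma_v, H0, sigma_H) for d in d_grid], step)
p_unif = normalize([posterior_uniform(d, v0, sigma_v, H0, sigma_H) for d in d_grid], step)
```

The three lists can then be plotted against `d_grid` with any plotting library. The posteriors with an uncertain \(H\) have long tails towards large \(d\), so the normalization on a finite grid is only approximate.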

Solution to Exercise 7.4 (The standard random variable)

The transformation \(z = f(x) = (x-\mu)/\sigma\) gives the inverse \(x = f^{-1}(z) = \sigma z + \mu\) and the Jacobian \(|dx/dz| = \sigma\).

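Therefore \(\pdf{z}{I} = \pdf{x}{I} \sigma\). With the given Gaussian form of \(\pdf{x}{I}\), with mean \(\mu\) and standard deviation \(\sigma\), we get

\[ \pdf{z}{I} = \sigma \frac{1}{\sqrt{2\pi}\sigma} \exp\left( - \frac{(x-\mu)^2}{2\sigma^2} \right) = \frac{1}{\sqrt{2\pi}} \exp\left( - \frac{z^2}{2} \right), \]
which corresponds to a Gaussian distribution with mean zero and variance one. The variable \(z\) is sometimes known as a standard random variable.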
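The result can be checked empirically with a short sampling sketch. The values of `mu` and `sigma` and the helper name `standardize` are hypothetical. The check simply draws Gaussian samples, applies the transformation, and prints the sample mean and variance of \(z\), which should come out close to zero and one.

```python
import random
import statistics


def standardize(samples, mu, sigma):
    """Apply z = (x - mu) / sigma to every sample."""
    return [(x - mu) / sigma for x in samples]


rng = random.Random(0)   # fixed seed for reproducibility
mu, sigma = 3.0, 2.0     # hypothetical mean and standard deviation of x
x = [rng.gauss(mu, sigma) for _ in range(100_000)]
z = standardize(x, mu, sigma)

print("mean of z:", statistics.fmean(z))
print("variance of z:", statistics.pvariance(z))
```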

Solution to Exercise 7.5 (The square root of a number)

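With \(z = f(x) = \sqrt{x}\) we have \(x = f^{-1}(z) = z^2\) such that \(|dx/dz| = 2|z|\). We note that \(z\) is positive such that \(|z| = z\) and we therefore have

\[ \pdf{z}{I} = 2z \, \pdf{x}{I} \Big|_{x=z^2} \]
for \(z\) in the range allowed by the given \(\pdf{x}{I}\), and 0 elsewhere.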

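We check the normalization by performing the integral

\[ \int \pdf{z}{I} \, dz = \int 2z \, \pdf{x}{I} \Big|_{x=z^2} \, dz = \int \pdf{x}{I} \, dx = 1, \]
where the substitution \(x = z^2\), \(dx = 2z \, dz\) was used and the integrals run over the allowed ranges of \(z\) and \(x\), respectively.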
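As an illustration of the change of variables, the sketch below assumes (this is an assumption, not necessarily the prior specified in the exercise) that \(x\) is uniform on \([0, 1]\). In that case the transformed PDF is \(2z\) on \([0, 1]\), with cumulative distribution \(z^2\), and this can be compared with samples of \(\sqrt{x}\). The helper names are hypothetical.

```python
import math
import random


def sample_z(n, rng):
    """Draw n samples of z = sqrt(x) with x uniform on [0, 1) (assumed prior)."""
    return [math.sqrt(rng.random()) for _ in range(n)]


def empirical_cdf(samples, z):
    """Fraction of samples that are less than or equal to z."""
    return sum(1 for s in samples if s <= z) / len(samples)


rng = random.Random(1)   # fixed seed for reproducibility
z_samples = sample_z(100_000, rng)

# Compare the empirical CDF with the analytic CDF z**2 that follows from p(z) = 2z.
for point in (0.25, 0.5, 0.75):
    print(point, empirical_cdf(z_samples, point), point ** 2)
```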

Solution to Exercise 7.6 (Gaussian sum of errors)

The PDF \(\pdf{Z}{I}\) is Gaussian with mean \(\expect{Z} = z_0 = x_0+y_0\) and variance \(\var{Z} = \sigma_z^2 = \sigma_x^2 + \sigma_y^2\), where \(x_0,y_0\) and \(\sigma_x^2, \sigma_y^2\) are the means and variances of \(X\) and \(Y\), respectively.

This is the same result as in Example 7.1, which should not be surprising since the errors were in fact Gaussian.
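A quick Monte Carlo sketch can be used to confirm the mean and variance of the sum. The numerical values and the helper name `sample_sum` are hypothetical, and \(X\) and \(Y\) are assumed to be independent.

```python
import random
import statistics


def sample_sum(x0, sigma_x, y0, sigma_y, n, rng):
    """Draw n samples of Z = X + Y for independent Gaussian X and Y."""
    return [rng.gauss(x0, sigma_x) + rng.gauss(y0, sigma_y) for _ in range(n)]


rng = random.Random(2)   # fixed seed for reproducibility
x0, sigma_x, y0, sigma_y = 10.0, 0.3, 5.0, 0.4   # hypothetical values
z_sum = sample_sum(x0, sigma_x, y0, sigma_y, 100_000, rng)

print("sample mean:", statistics.fmean(z_sum), "expected:", x0 + y0)
print("sample variance:", statistics.pvariance(z_sum),
      "expected:", sigma_x ** 2 + sigma_y ** 2)
```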

Solution to Exercise 7.7 (Gaussian product of errors)

The PDF \(\pdf{Z}{I}\) is, to a good approximation when the relative errors are small, Gaussian with mean \(\expect{Z} = z_0 = x_0 y_0\) and variance \(\var{Z} = \sigma_z^2 = y_0^2 \sigma_x^2 + x_0^2 \sigma_y^2\), where \(x_0,y_0\) and \(\sigma_x^2, \sigma_y^2\) are the means and variances of \(X\) and \(Y\), respectively.
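The variance quoted here is the first-order (linear error propagation) expression. For independent \(X\) and \(Y\) the exact variance of the product contains the additional term \(\sigma_x^2 \sigma_y^2\), which is negligible when the relative errors are small. The sketch below compares both expressions with a Monte Carlo estimate. All numerical values and function names are hypothetical.

```python
import random
import statistics


def first_order_variance(x0, sigma_x, y0, sigma_y):
    """Linear error propagation for Z = X * Y."""
    return y0 ** 2 * sigma_x ** 2 + x0 ** 2 * sigma_y ** 2


def exact_variance(x0, sigma_x, y0, sigma_y):
    """Exact variance of the product of two independent random variables."""
    return first_order_variance(x0, sigma_x, y0, sigma_y) + sigma_x ** 2 * sigma_y ** 2


def sample_product(x0, sigma_x, y0, sigma_y, n, rng):
    """Draw n samples of Z = X * Y for independent Gaussian X and Y."""
    return [rng.gauss(x0, sigma_x) * rng.gauss(y0, sigma_y) for _ in range(n)]


rng = random.Random(3)   # fixed seed for reproducibility
params = (10.0, 0.2, 5.0, 0.1)   # hypothetical x0, sigma_x, y0, sigma_y
z_prod = sample_product(*params, 200_000, rng)

print("sample mean:", statistics.fmean(z_prod))
print("sample variance:", statistics.pvariance(z_prod))
print("first-order variance:", first_order_variance(*params))
print("exact variance:", exact_variance(*params))
```

With relative errors of a few percent, as in these hypothetical numbers, the two variance expressions differ by far less than the Monte Carlo noise.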